Five papers by CSE researchers presented at USENIX Security 2023

Five papers by researchers affiliated with CSE have been accepted for presentation at the 2023 USENIX Security Symposium, one of the world’s leading conferences on security and privacy in computer systems and networks. USENIX Security, which is taking place August 9-11 in Anaheim, CA, unites researchers and practitioners from across the globe with the aim of disseminating the latest developments, advancing the field, and addressing new and ongoing challenges in security and privacy.

The papers authored by CSE researchers cover a range of topics related to the enhancement of security and privacy measures in modern computing systems. Subjects include the VPN ecosystem, autonomous vehicle security, face obfuscation systems, and mobile device “shoulder-surfing,” among others.

The papers being presented at USENIX Security are as follows, with the names of CSE researchers in bold:

Network Responses to Russia’s Invasion of Ukraine in 2022: A Cautionary Tale for Internet Freedom

Reethika Ramesh, Ram Sundara Raman, Apurva Virkud, Alexandra Dirksen, Armin Huremagic, David Fifield, Dirk Rodenburg, Rod Hynes, Doug Madory, Roya Ensafi

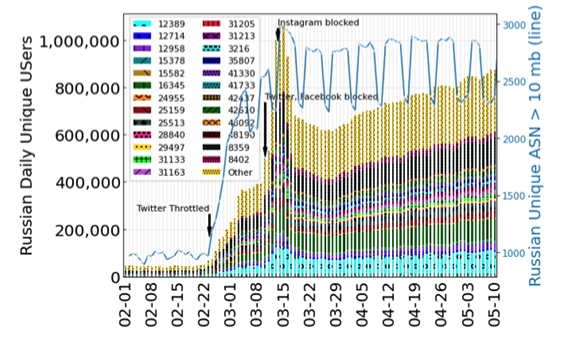

Abstract: Russia’s invasion of Ukraine in February 2022 was followed by sanctions and restrictions: by Russia against its citizens, by Russia against the world, and by foreign actors against Russia. Reports suggested a torrent of increased censorship, geoblocking, and network events affecting Internet freedom.

This paper is an investigation into the network changes that occurred in the weeks following this escalation of hostilities. It is the result of a rapid mobilization of researchers and activists, examining the problem from multiple perspectives. We develop GeoInspector, and conduct measurements to identify different types of geoblocking, and synthesize data from nine independent data sources to understand and describe various network changes. Immediately after the invasion, more than 45% of Russian government domains tested blocked access from countries other than Russia and Kazakhstan; conversely, 444 foreign websites, including news and educational domains, geoblocked Russian users. We find significant increases in Russian censorship, especially of news and social media. We find evidence of the use of BGP withdrawals to implement restrictions, and we quantify the use of a new domestic certificate authority. Finally, we analyze data from circumvention tools, and investigate their usage and blocking. We hope that our findings showing the rapidly shifting landscape of Internet splintering serves as a cautionary tale, and encourages research and efforts to protect Internet freedom.

“All of them claim to be the best”: Multi-perspective study of VPN users and VPN providers

Reethika Ramesh, Anjali Vyas, Roya Ensafi

Abstract: As more users adopt VPNs for a variety of reasons, it is important to develop empirical knowledge of their needs and mental models of what a VPN offers. Moreover, studying VPN users alone is not enough because, by using a VPN, a user essentially transfers trust, say from their network provider, onto the VPN provider. To that end, we are the first to study the VPN ecosystem from both the users’ and the providers’ perspectives. In this paper, we conduct a quantitative survey of 1,252 VPN users in the U.S. and qualitative interviews of nine providers to answer several research questions regarding the motivations, needs, threat model, and mental model of users, and the key challenges and insights from VPN providers. We create novel insights by augmenting our multi-perspective results, and highlight cases where the user and provider perspectives are misaligned. Alarmingly, we find that users rely on and trust VPN review sites, but VPN providers shed light on how these sites are mostly motivated by money. Worryingly, we find that users have flawed mental models about the protection VPNs provide, and about data collected by VPNs. We present actionable recommendations for technologists and security and privacy advocates by identifying potential areas on which to focus efforts and improve the VPN ecosystem.

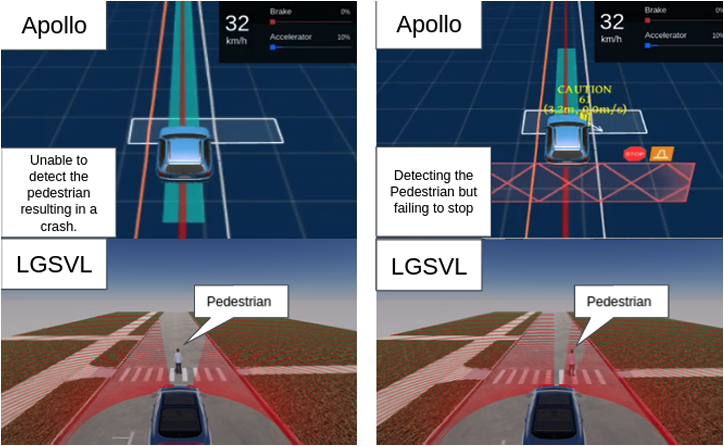

You Can’t See Me: Physical Removal Attacks on LiDAR-based Autonomous Vehicles Driving Frameworks

Yulong Cao, S. Hrushikesh Bhupathiraju, Pirouz Naghavi, Takeshi Sugawara, Z. Morley Mao, Sara Rampazzi

Abstract: Autonomous Vehicles (AVs) increasingly use LiDAR-based object detection systems to perceive other vehicles and pedestrians on the road. While existing attacks on LiDAR-based autonomous driving architectures focus on lowering the confidence score of AV object detection models to induce obstacle misdetection, our research discovers how to leverage laser-based spoofing techniques to selectively remove the LiDAR point cloud data of genuine obstacles at the sensor level before being used as input to the AV perception. The ablation of this critical LiDAR information causes autonomous driving obstacle detectors to fail to identify and locate obstacles and, consequently, induces AVs to make dangerous automatic driving decisions. In this paper, we present a method invisible to the human eye that hides objects and deceives autonomous vehicles’ obstacle detectors by exploiting inherent automatic transformation and filtering processes of LiDAR sensor data integrated with autonomous driving frameworks. We call such attacks Physical Removal Attacks (PRA), and we demonstrate their effectiveness against three popular AV obstacle detectors (Apollo, Autoware, PointPillars), and we achieve 45° attack capability. We evaluate the attack impact on three fusion models (Frustum-ConvNet, AVOD, and Integrated-Semantic Level Fusion) and the consequences on the driving decision using LGSVL, an industry-grade simulator. In our moving vehicle scenarios, we achieve a 92.7% success rate removing 90% of a target obstacle’s cloud points. Finally, we demonstrate the attack’s success against two popular defenses against spoofing and object hiding attacks and discuss two enhanced defense strategies to mitigate our attack.

Eye-Shield: Real-Time Protection of Mobile Device Screen Information from Shoulder Surfing

Brian Jay Tang, Kang G. Shin

Abstract: People use mobile devices ubiquitously for computing, communication, storage, web browsing, and more. As a result, the information accessed and stored within mobile devices, such as financial and health information, text messages, and emails, can often be sensitive. Despite this, people frequently use their mobile devices in public areas, becoming susceptible to a simple yet effective attack – shoulder surfing. Shoulder surfing occurs when a person near a mobile user peeks at the user’s mobile device, potentially acquiring passcodes, PINs, browsing behavior, or other personal information. We propose Eye-Shield, a solution to prevent shoulder surfers from accessing/stealing sensitive on-screen information. Eye-Shield is designed to protect all types of on-screen information in real time, without any serious impediment to users’ interactions with their mobile devices. Eye-Shield generates images that appear readable at close distances, but appear blurry or pixelated at farther distances and wider angles. It is capable of protecting on-screen information from shoulder surfers, operating in real time, and being minimally intrusive to the intended users. Eye-Shield protects images and text from shoulder surfers by reducing recognition rates to 24.24% and 15.91%. Our implementations of Eye-Shield achieved high frame rates for 1440 × 3088 screen resolutions (24 FPS for Android and 43 FPS for iOS). Eye-Shield also incurs acceptable memory usage, CPU utilization, and energy overhead. Finally, our MTurk and in-person user studies indicate that Eye-Shield protects on-screen information without a large usability cost for privacy-conscious users.

Fairness Properties of Face Recognition and Obfuscation Systems

Harrison Rosenberg, Brian Tang, Kassem Fawaz, Somesh Jha

Abstract: The proliferation of automated face recognition in the commercial and government sectors has caused significant privacy concerns for individuals. One approach to address these privacy concerns is to employ evasion attacks against the metric embedding networks powering face recognition systems: Face obfuscation systems generate imperceptibly perturbed images that cause face recognition systems to misidentify the user. Perturbed faces are generated on metric embedding networks, which are known to be unfair in the context of face recognition. A question of demographic fairness naturally follows: are there demographic disparities in face obfuscation system performance? We answer this question with an analytical and empirical exploration of recent face obfuscation systems. Metric embedding networks are found to be demographically aware: face embeddings are clustered by demographic. We show how this clustering behavior leads to reduced face obfuscation utility for faces in minority groups. An intuitive analytical model yields insight into these phenomena.
